Ideally shard sizes should be between 10–50 GB, the calculations are done based on desired shard size selection.

Shard size impacts both search latency and write performance, too many small shards will exhaust the memory (JVM Heap), and too few large shards prevent OpenSearch from properly distributing requests.

There is a limit on how many shards a node can handle, it’s useful to check how many shards a node can accommodate, inspect cluster settings documentation.

cluster.max_shards_per_node setting limits the total number of primary and replica shards for the cluster, default is 1000

Set your index shard count to be divisible by your instance count,to ensure a even distribution of shards across the data nodes.

Get sorted view for shards distribution across cluster nodes, using OpenSearch API: GET _cat/shards?v=true&s=store:desc&h=index,node,store

Each shard is a full Lucene Index, and each instance of Lucene is a running process that consumes CPU and memory.

When a shard is involved in an indexing/search request, it uses a CPU to process the request.

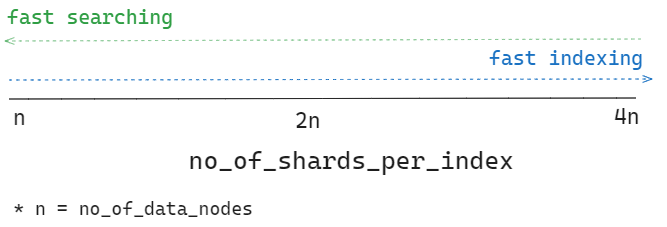

Avoid Oversharding: Each shard you add to an index distributes the processing of requests for that index across an additional CPU. The number of active shards that your domain can support depends on the number of CPUs in the cluster.

A small set of large shards uses fewer resources than many small shards.

🟢 Green: all primary shards and their replicas are allocated to nodes

🟡 Yellow: all primary shards are allocated to nodes, but some replicas aren’t

🔴 Red: at least one primary shard and its replicas are not allocated to any node

📌Unassigned shards cannot be deleted, an unassigned shard is not a corrupted shard, but a missing replica.

To understand why a shard cannot be allocated to a node, use the OpenSearch API: GET /_cluster/allocation/explain.